date: 2022-10-26

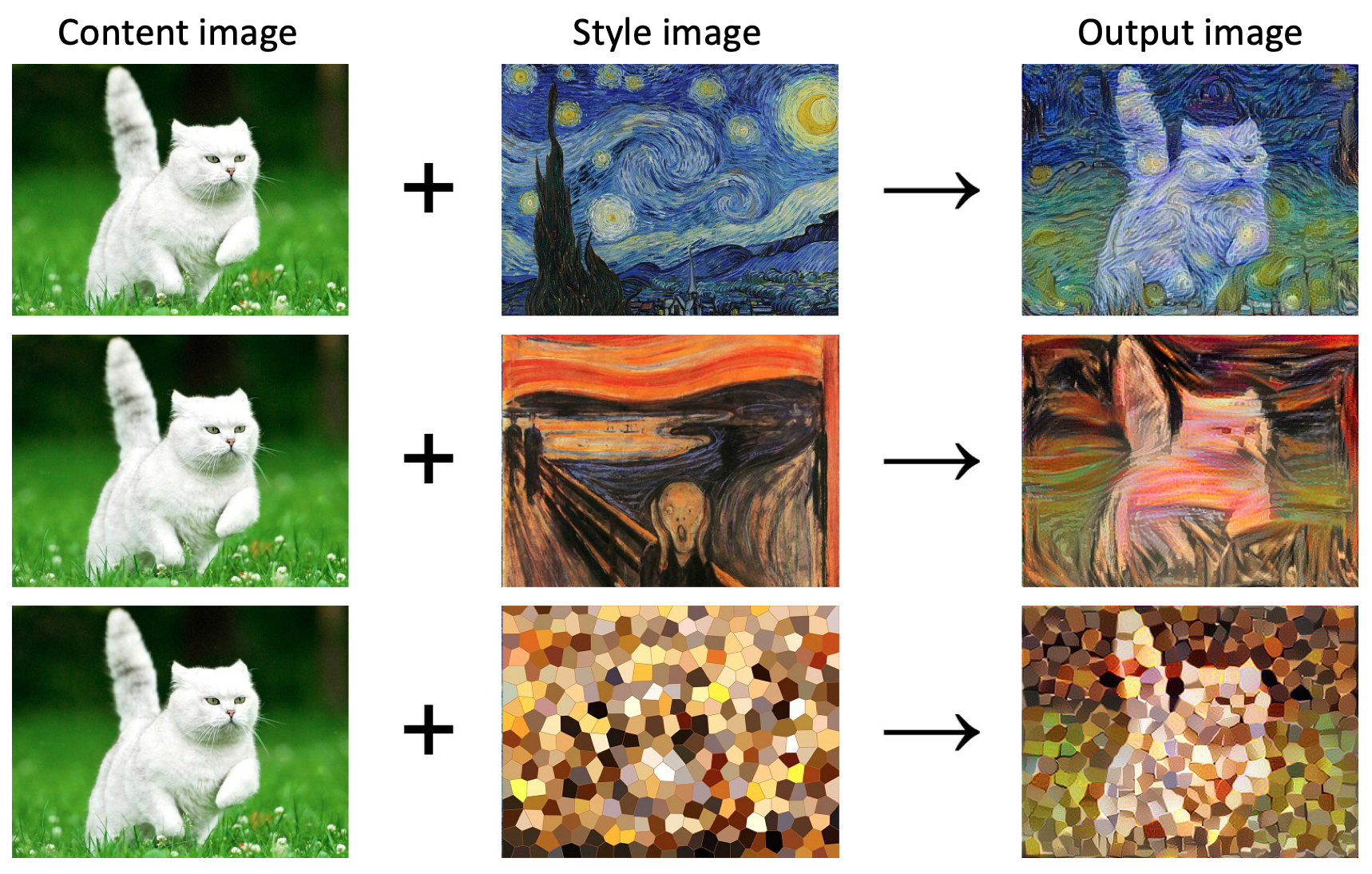

Style transfer aims to transform a content image by transferring the semantic texture of a style image onto it. Existing neural style transfer methods require a reference style image in order to transfer its texture information to the content image.

However, this dependence is a limitation: the texture of a content image can be changed only when a suitable reference style image is available.

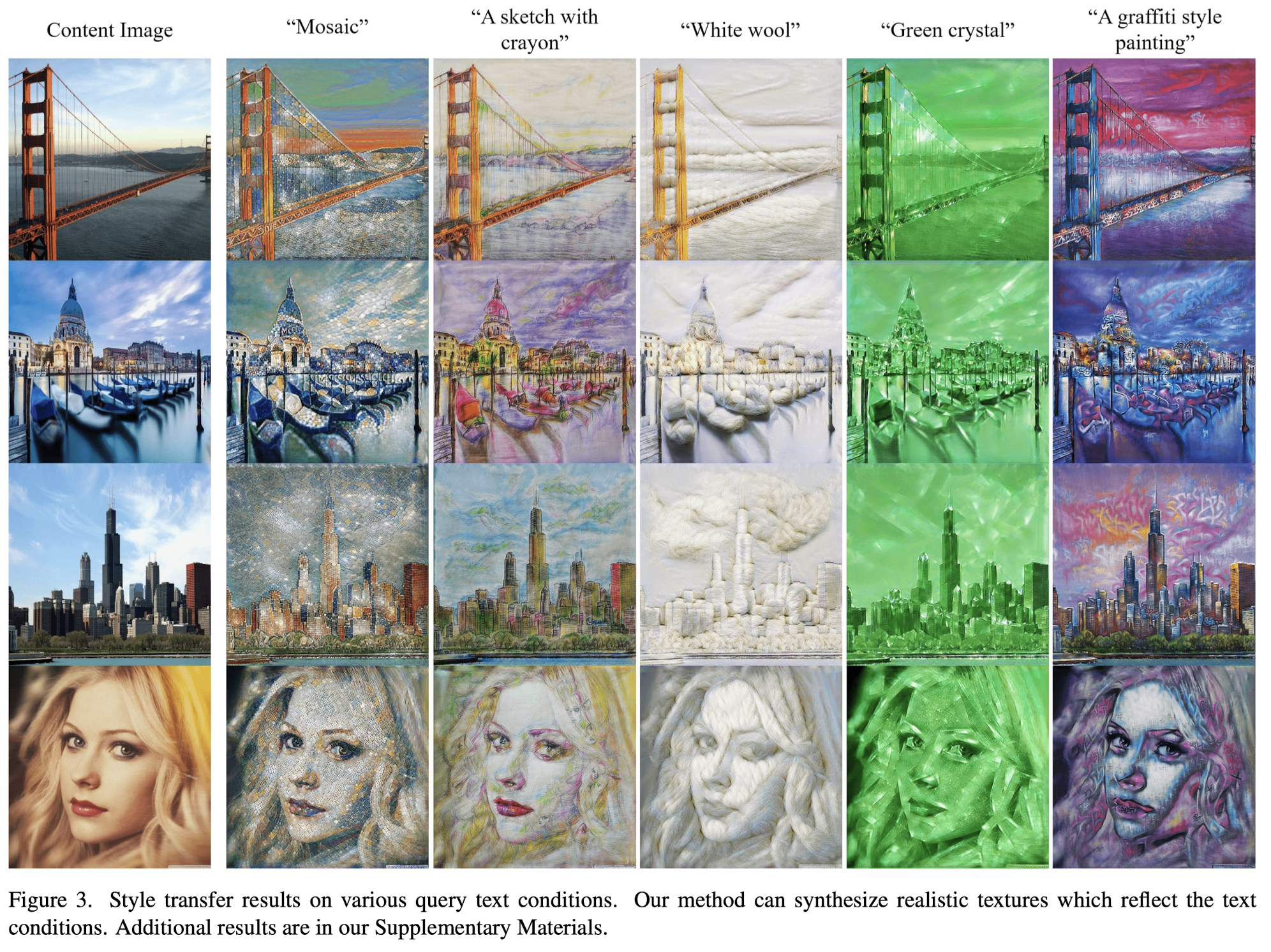

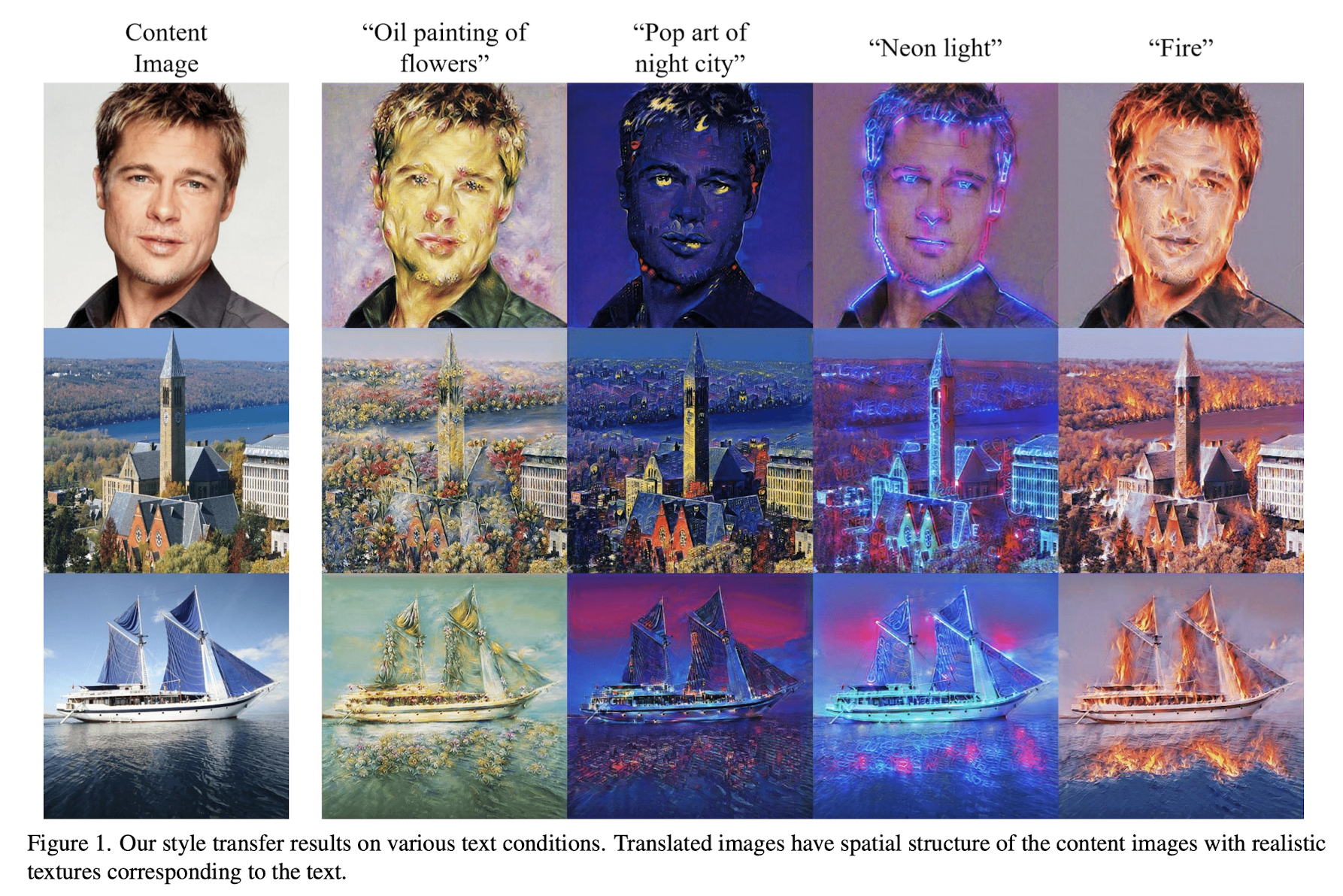

This paper proposes a new framework that enables style transfer without a reference style image, using only a text description of the desired style.

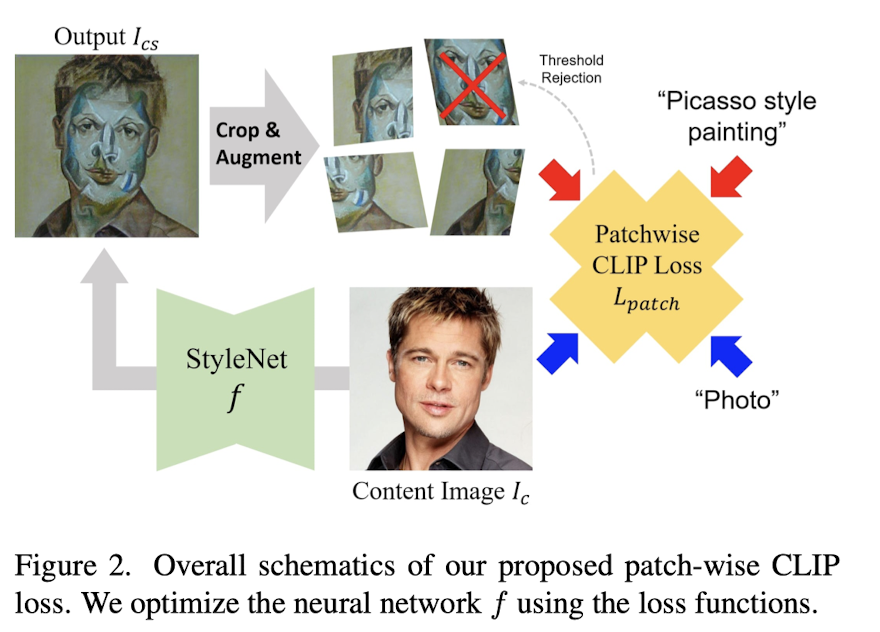

The content image is transformed by a lightweight CNN so that it follows the text condition. The network is trained by matching the CLIP model output for the transferred image to the text condition, i.e. by maximizing the similarity between the two.
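As a minimal sketch of the similarity objective, assume the CLIP image embedding of the transferred image and the CLIP text embedding of the condition have already been computed (plain lists of floats stand in for them here). The loss below is one minus their cosine similarity; `clip_loss` is a hypothetical helper name, and `1 - cos` is a common choice rather than necessarily the paper's exact formulation.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def clip_loss(image_emb: Sequence[float], text_emb: Sequence[float]) -> float:
    """Hypothetical loss: 0 when the transferred image's embedding points the
    same way as the text embedding, up to 2 when it points the opposite way."""
    return 1.0 - cosine_similarity(image_emb, text_emb)


if __name__ == "__main__":
    # Toy vectors standing in for CLIP embeddings (real ones have hundreds of dims).
    text = [0.6, 0.8, 0.0]
    close = [0.5, 0.85, 0.1]
    far = [-0.8, 0.1, 0.6]
    print(clip_loss(close, text), clip_loss(far, text))
```

Minimizing this quantity with respect to the CNN's parameters pushes the transferred image's embedding toward the text embedding; only the CNN is updated, while the CLIP encoders stay fixed.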

- sample random patches of the output image

- apply random perspective augmentation to each patch, producing different views

- obtain the CLIP loss by computing the similarity between the query text condition and the augmented patches
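The three steps above can be sketched as follows, assuming the image is a toy 2-D list of pixel values. `patch_clip_loss`, `sample_patches`, `random_flip`, and `mean_embedding` are hypothetical names. The image encoder and the augmentation are passed in as callables because a real version would call CLIP's image encoder and a random perspective warp (for example torchvision's `RandomPerspective`), neither of which is reproduced here. In the demo, a random horizontal flip stands in for the perspective augmentation and a mean-brightness vector stands in for the CLIP embedding, so only the control flow (crop, augment, encode, compare, average) reflects the method.

```python
import math
import random
from typing import Callable, List, Sequence

Image = List[List[float]]  # toy grayscale image: rows of pixel values


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def sample_patches(image: Image, size: int, n: int, rng: random.Random) -> List[Image]:
    """Crop n random size x size patches from the image."""
    h, w = len(image), len(image[0])
    patches = []
    for _ in range(n):
        top = rng.randint(0, h - size)
        left = rng.randint(0, w - size)
        patches.append([row[left:left + size] for row in image[top:top + size]])
    return patches


def patch_clip_loss(
    output: Image,
    text_emb: Sequence[float],
    encode_image: Callable[[Image], Sequence[float]],  # stand-in for CLIP image encoder
    augment: Callable[[Image, random.Random], Image],  # stand-in for random perspective
    size: int = 4,
    n_patches: int = 8,
    seed: int = 0,
) -> float:
    """Average of (1 - cosine similarity) over augmented random patches."""
    rng = random.Random(seed)
    losses = []
    for patch in sample_patches(output, size, n_patches, rng):
        view = augment(patch, rng)  # step 2: augmented view of the patch
        sim = cosine_similarity(encode_image(view), text_emb)  # step 3
        losses.append(1.0 - sim)
    return sum(losses) / len(losses)


def random_flip(patch: Image, rng: random.Random) -> Image:
    # Hypothetical stand-in augmentation: mirror each row with probability 0.5.
    return [row[::-1] for row in patch] if rng.random() < 0.5 else patch


def mean_embedding(patch: Image) -> List[float]:
    # Hypothetical toy "encoder": [mean brightness, constant bias]; NOT CLIP.
    pixels = [p for row in patch for p in row]
    return [sum(pixels) / len(pixels), 1.0]


if __name__ == "__main__":
    image = [[1.0] * 8 for _ in range(8)]
    print(patch_clip_loss(image, [1.0, 1.0], mean_embedding, random_flip))
```

Averaging over many small, randomly augmented patches, rather than scoring the whole image once, encourages the texture to match the text locally across the image.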

Results